Abstract

This thesis studies the recognition of American Sign Language (ASL) from existing video datasets as a foundation for future work on Vietnamese Sign Language recognition. The system is intended to run in real time, both as a teaching tool for deaf children and as an aid to communication in public spaces such as train stations, hospitals, and airports. The research has two main parts: recognition of static ASL gestures and recognition of continuous ASL gestures. The key contributions are a foundational study of sign language recognition that uses geometric modeling for feature extraction, and experiments that evaluate the recognition rates of several machine learning methods. The thesis also explores video segmentation techniques that extract key frames for real-time recognition of ASL alphabet letters. For continuous gestures, it investigates methods for capturing joint coordinates, with a focus on feature analysis and recognition performance. The overall aim is a practical application that improves communication between hearing-impaired and hearing people and thereby supports the integration of hearing-impaired people into the community.

Introduction

According to the World Health Organization (WHO), approximately 15% of the global population, more than 1 billion people, live with some form of disability. Reports from WHO and the World Bank indicate that people with disabilities fare worse on development indicators than people without disabilities, primarily because of limited access to essential services and social stigma. In Vietnam, the Ministry of Labor, Invalids and Social Affairs reports that the country has a relatively high proportion of people with disabilities within the Asia-Pacific region: an estimated 7.8% to 15% of the population is classified as disabled, and approximately 13% of these individuals are hearing impaired. Hearing impairment affects a person's auditory capabilities and may be congenital or the result of an accident. Because many hearing-impaired people cannot communicate verbally, they rely on sign language, which conveys meaning through hand gestures and facial expressions rather than sound. Although sign language is used within the deaf community, it is not widely known in mainstream society, which creates a significant barrier between hearing-impaired people and the general population. People with disabilities are receiving increasing attention from society, and it is essential that they have access to education, vocational training, and employment. Sign languages developed organically under the influence of local customs and habits, so they vary significantly between regions and countries. Many nations have therefore worked to standardize a national sign language so that its users share a common understanding.
Given this context, I have chosen the topic “Sign Language Recognition to Assist Communication with Hearing-Impaired Individuals” for my thesis, with the aim of contributing to research in sign language recognition techniques and applying these advancements to facilitate the integration of hearing-impaired individuals into the community.

Proposed Method

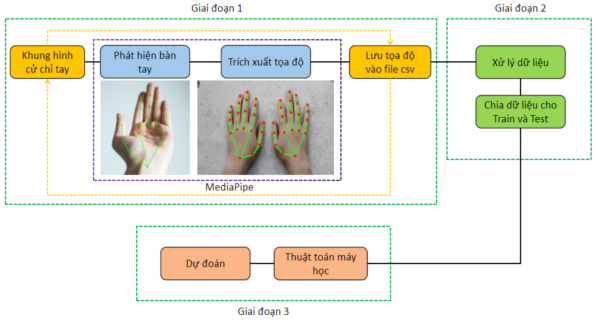

The initial study of sign language recognition based on image processing consists of three stages: preprocessing, feature extraction, and classification, as illustrated in Figure 2.24. The preprocessing stage has two sub-stages, image filtering with color filters and hand segmentation, which address the system's dependence on the environment and on sensor variability. In the feature extraction stage, the key landmarks of the hand are extracted; their coordinates form a characteristic skeleton of the hand and are normalized according to a defined rule. Finally, an LSTM model classifies the hand gestures. The system was also tested in real time on data captured from a camera at a resolution of 640 × 480.
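The thesis does not state its normalization rule, so the following is only a minimal Python sketch of one common choice, assuming each frame arrives as a list of (x, y) landmark coordinates with the wrist at index 0 (the MediaPipe Hands convention): translate every point so the wrist becomes the origin, then divide by the largest wrist-to-landmark distance. The function names `normalize_landmarks` and `flatten` are hypothetical.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def normalize_landmarks(points: List[Point]) -> List[Point]:
    """Translate landmarks so the first point (assumed to be the wrist)
    sits at the origin, then scale so the farthest point lies at distance 1.

    This is one plausible normalization rule, not necessarily the thesis's.
    """
    if not points:
        return []
    ox, oy = points[0]  # assumption: index 0 is the wrist, as in MediaPipe Hands
    shifted = [(x - ox, y - oy) for x, y in points]
    scale = max(math.hypot(x, y) for x, y in shifted)
    if scale == 0:
        return shifted  # degenerate case: all landmarks coincide
    return [(x / scale, y / scale) for x, y in shifted]


def flatten(points: List[Point]) -> List[float]:
    """Turn [(x0, y0), (x1, y1), ...] into [x0, y0, x1, y1, ...]
    so that one frame becomes a fixed-length feature vector."""
    return [c for p in points for c in p]


if __name__ == "__main__":
    # Four illustrative pixel landmarks for a single frame.
    frame = [(320, 400), (350, 380), (380, 300), (320, 200)]
    print(flatten(normalize_landmarks(frame)))
```

Translating to the wrist removes dependence on where the hand appears in the image, and scaling removes dependence on hand size and distance from the camera, which are the kinds of environmental variation the preprocessing stage targets.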

When recognition starts, the camera captures frames, which are filtered to reduce noise and smooth the image. MediaPipe then extracts the coordinates of the key body landmarks, each given in the (x, y, z) coordinate system. The real-valued x and y coordinates are converted to integers, and the depth coordinate z is not used in this study. In training mode, the resulting data are labeled; in testing mode, they are passed to the classifier to produce a recognition result.
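The coordinate conversion can be sketched as follows. This is an illustrative Python version, not the thesis code: it assumes landmarks arrive as (x, y, z) tuples with x and y normalized to [0, 1] by the image width and height, as MediaPipe reports them, and it uses the 640 × 480 resolution from the real-time tests. The name `to_pixel_coords` and the clamping of out-of-range values are assumptions.

```python
from typing import List, Sequence, Tuple

# Camera resolution used in the real-time tests.
WIDTH, HEIGHT = 640, 480


def to_pixel_coords(
    landmarks: Sequence[Tuple[float, float, float]],
    width: int = WIDTH,
    height: int = HEIGHT,
) -> List[Tuple[int, int]]:
    """Convert normalized (x, y, z) landmarks to integer pixel (x, y).

    x and y are assumed to be normalized to [0, 1] by image width and
    height; z (relative depth) is discarded. Values that fall slightly
    outside the image are clamped to its border.
    """
    pixels = []
    for x, y, _z in landmarks:  # _z: depth is not used
        px = min(max(int(x * width), 0), width - 1)
        py = min(max(int(y * height), 0), height - 1)
        pixels.append((px, py))
    return pixels
```

`int()` truncates toward zero; `round()` would be an equally reasonable choice if the small truncation bias matters.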

Results


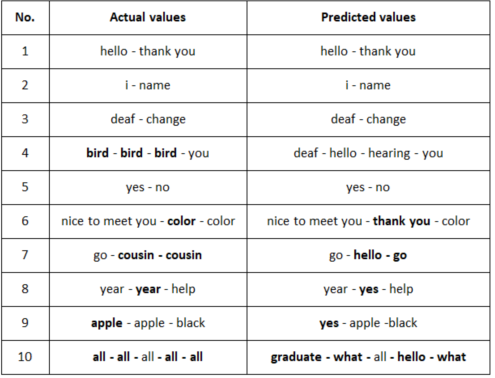

Continuous gesture recognition was tested live with the computer's camera. For each captured frame, MediaPipe detects the hands and returns 44 landmark coordinates, and the coordinates from 16 consecutive frames are stored for normalization. Frames in which no hand landmarks are detected are discarded and do not count toward these 16 valid frames. Once 16 valid frames have been collected, the data are compared with the training data and the recognition result is returned. Recognition of continuous gestures is fairly accurate, but significant errors remain, caused by discrepancies between the training dataset (videos) and live camera input, variations in camera angle, differences in the speed at which gestures are performed, and other factors.
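The frame-collection logic described above can be sketched as a small buffer. In this hypothetical Python sketch, a frame with no detected hand is passed as `None` and skipped, and `classify` is a placeholder for whatever model consumes the 16-frame window (the trained LSTM, in the thesis's pipeline). Clearing the buffer after each window, so that windows do not overlap, is an assumption; the text does not say how consecutive windows are handled.

```python
from typing import Callable, List, Optional, Sequence

Frame = Sequence[float]  # one frame's flattened landmark coordinates

SEQUENCE_LENGTH = 16  # number of valid frames per recognition window


class GestureWindow:
    """Collect landmark frames until a full window is available.

    Frames where no hand was detected (passed as None) are skipped and do
    not count toward the window. When `length` valid frames have been
    collected, the window is handed to `classify` and the buffer is cleared.
    """

    def __init__(self, classify: Callable[[List[Frame]], str],
                 length: int = SEQUENCE_LENGTH) -> None:
        self.classify = classify  # hypothetical hook for the trained model
        self.length = length
        self.frames: List[Frame] = []

    def push(self, frame: Optional[Frame]) -> Optional[str]:
        """Add one frame; return a label once a full window is classified."""
        if frame is None:  # no hand landmarks detected: ignore this frame
            return None
        self.frames.append(frame)
        if len(self.frames) < self.length:
            return None
        window, self.frames = self.frames, []  # non-overlapping windows
        return self.classify(window)
```

A sliding window, for example a `collections.deque(maxlen=16)` classified on every new valid frame, would respond faster at the cost of running the model on every frame.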

Conclusions

This thesis successfully achieved its objectives in recognizing American Sign Language (ASL), focusing on both static and continuous gestures. Key accomplishments include a comprehensive overview of sign language recognition systems, the application of geometric feature extraction methods, and the development of two datasets for testing. The study utilized MediaPipe for hand landmark detection and explored LSTM models for recognition. Although the current success rate is limited, the findings lay a solid foundation for future research in both ASL and Vietnamese sign language recognition.
