Abstract

Face recognition is applied in many fields, such as security systems, biometric systems, and attendance tracking. Many face recognition techniques have been researched and developed, and deep learning techniques achieve outstanding accuracy. At the same time, with the development of education in Vietnam, the number of university students is increasing, which makes student management more difficult and complicated. Currently, student quality is not inspected and assessed as well as it could be, because lecturers often skip taking attendance and allow students to be absent from classes. This work aims to help lecturers check and evaluate student attendance more effectively. The main function of the program is to take student attendance while preventing forgery with printed photos or replayed videos of registered users. Faces are detected with the RetinaFace model and encoded with a pre-trained deep convolutional neural network, and the face data are then stored for convenient extraction and later identification. Face spoofing is detected with MiniFASNet. The user interface is designed with Qt Designer, making interaction with the program more intuitive. The proposed model is evaluated experimentally on detecting fake face logins, and the results are compared against existing models. The test results show an accuracy of 82–86%, indicating that the combined method is feasible and achieves high accuracy. The identification results are based on a sample of photos collected from 10 male and female students, and the system can clearly recognize when a student is using a printed photo or video playback. With this result, the study provides a method that can improve the performance of facial recognition technology.

Introduction

Biometric identification is defined as the process of recognizing an individual based on distinguishing physiological and/or behavioral characteristics, a process also referred to as biometric recognition [1][2]. It links an individual to a previously established identity based on who they are or what they do. Because certain traits are unique to each person, biometric identification is considered more reliable and more robust against attacks than other identification techniques. Many applications, especially those requiring high security, use biometric modalities such as iris patterns, fingerprints, and facial features for identification. However, these modalities face practical limitations and a rapidly evolving range of attacks: attackers can now replicate a user's fingerprint to gain unauthorized access, and iris scanners are often too expensive for most organizations and the general public. Consequently, 2D facial recognition using standard cameras is the most suitable authentication technique for the following reasons. First, it requires only simple acquisition hardware, making it more cost-effective and user-friendly. Second, it demands significantly less computation than more complex methods such as 3D image analysis. This study therefore focuses on 2D facial recognition rather than other biometric verification methods [3].

Proposed Method

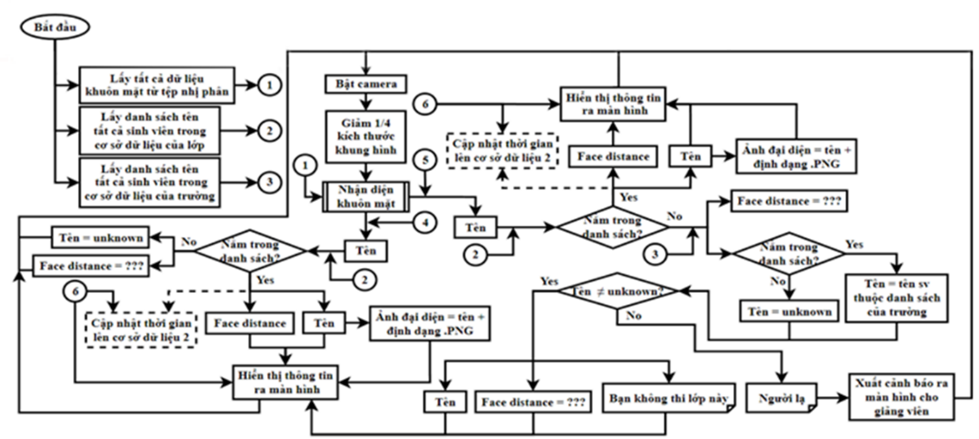

The proposed model is presented in Figure 1.
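The components of the model are named in the abstract (RetinaFace detection, CNN face encoding, MiniFASNet spoof detection), but Figure 1 itself is not reproduced in text. The following is therefore only a minimal sketch of how one camera frame might flow through such a pipeline. It assumes that the spoof check runs before identity matching and that a student is marked present at most once per session. `detect`, `liveness_score`, `encode`, and `identify` are hypothetical placeholders for the real models, and the 0.5 liveness threshold is an illustrative value, not one taken from this study.

```python
from dataclasses import dataclass, field
from datetime import datetime
from typing import Any, Callable, Dict, List, Optional, Sequence

# Hypothetical component signatures: the real models (RetinaFace, a CNN encoder,
# MiniFASNet) would be wrapped behind these callables.
Detector = Callable[[Any], List[Any]]                    # frame -> list of face crops
LivenessScorer = Callable[[Any], float]                  # face crop -> probability it is real
Encoder = Callable[[Any], Sequence[float]]               # face crop -> embedding vector
Identifier = Callable[[Sequence[float]], Optional[str]]  # embedding -> name or None


@dataclass
class AttendanceSession:
    liveness_threshold: float = 0.5  # illustrative value, not from the study
    present: Dict[str, datetime] = field(default_factory=dict)

    def process_frame(self, frame: Any, detect: Detector, liveness_score: LivenessScorer,
                      encode: Encoder, identify: Identifier) -> List[str]:
        """Return one status label per detected face in the frame."""
        labels: List[str] = []
        for face in detect(frame):
            # 1. Reject printed photos / replayed videos before identification.
            if liveness_score(face) < self.liveness_threshold:
                labels.append("Fake")
                continue
            # 2. Encode the live face and look it up among enrolled students.
            name = identify(encode(face))
            if name is None:
                labels.append("Unknown")
                continue
            # 3. Record the time only the first time a student is seen this session.
            self.present.setdefault(name, datetime.now())
            labels.append(name)
        return labels
```

Keeping the models behind plain callables lets the session logic be exercised with stubs before the trained networks are plugged in.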

Student Image Enrollment: Students who wish to take part in attendance must first register their faces by uploading a personal face image, which the program then encodes. The study sample consists of 10 students (5 male and 5 female) who provided images for recognition.
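Enrollment amounts to computing one face encoding per registered student and persisting it so that the attendance program can reload it at start-up. The sketch below assumes the encodings (for example, the 128-dimensional vectors produced by the Face Recognition library) have already been computed. The `FaceDatabase` class and the JSON file layout are hypothetical choices for illustration, not the storage format used in this study.

```python
import json
from pathlib import Path
from typing import Dict, List


class FaceDatabase:
    """Hypothetical store mapping each enrolled student to one face encoding."""

    def __init__(self) -> None:
        self.encodings: Dict[str, List[float]] = {}

    def enroll(self, name: str, encoding: List[float]) -> None:
        # Refuse silent overwrites so a student cannot be re-registered by mistake.
        if name in self.encodings:
            raise ValueError(f"{name} is already enrolled")
        self.encodings[name] = [float(x) for x in encoding]

    def save(self, path: Path) -> None:
        # JSON keeps the file human-readable: names map to lists of floats.
        path.write_text(json.dumps(self.encodings), encoding="utf-8")

    @classmethod
    def load(cls, path: Path) -> "FaceDatabase":
        db = cls()
        db.encodings = json.loads(path.read_text(encoding="utf-8"))
        return db
```

With 10 students and 128 floats each, the file stays small, so reloading the whole database at start-up is cheap; a binary format would only matter for much larger classes.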

Parameter Adjustment: In the Face Recognition library by Adam Geitgey, the maximum face distance at which two faces are still considered a match (the face match threshold, or tolerance) is adjustable. It should be set neither too high nor too low: a lower value makes matching stricter, while a higher value accepts faces that lie farther apart. A suitable setting keeps recognition accurate while rejecting candidate faces whose face distance is too large.
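In the Face Recognition library, the face distance is the Euclidean distance between two face encodings, and `compare_faces` treats two faces as a match when that distance is at most the tolerance (0.6 by default). The sketch below reimplements that rule in plain Python to show how the threshold trades off errors: lowering it rejects more impostors but may also reject genuine students, while raising it does the opposite. The `identify` helper, which picks the closest enrolled student and falls back to "Unknown", is a hypothetical illustration rather than the program's actual code.

```python
import math
from typing import Dict, Sequence, Tuple

DEFAULT_TOLERANCE = 0.6  # default tolerance of face_recognition.compare_faces


def face_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Euclidean distance between two face encodings of equal length."""
    if len(a) != len(b):
        raise ValueError("encodings must have the same length")
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def identify(probe: Sequence[float], known: Dict[str, Sequence[float]],
             tolerance: float = DEFAULT_TOLERANCE) -> Tuple[str, float]:
    """Return (name, distance) of the closest enrolled face, or ("Unknown", distance)
    if even the closest one is farther away than the tolerance."""
    if not known:
        return "Unknown", math.inf
    name, dist = min(((n, face_distance(probe, e)) for n, e in known.items()),
                     key=lambda item: item[1])
    return (name, dist) if dist <= tolerance else ("Unknown", dist)
```

For example, with two enrolled encodings `{"A": [0.0, 0.0], "B": [1.0, 1.0]}`, the probe `[0.3, 0.4]` lies 0.5 from "A": it is accepted as "A" at the default tolerance but reported as "Unknown" at a tolerance of 0.4.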

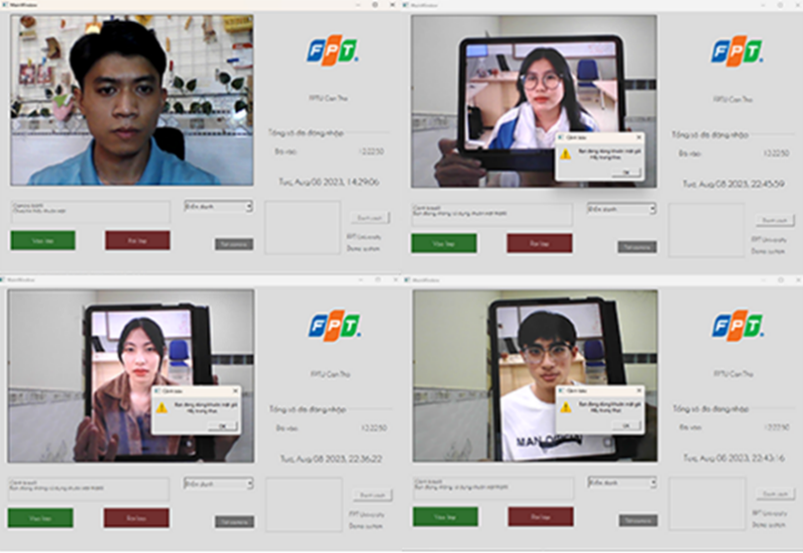

The black frame on the interface displays the camera video. Rather than showing recognition results in a separate OpenCV window, which can make the user experience feel disjointed, the face recognition video is embedded directly in the attendance interface. This is done by converting each OpenCV video frame into a QImage, the image class of the Qt framework whose widgets make up the interface designed in Qt Designer, and rendering it on the interface (Figure 2).
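OpenCV delivers camera frames as BGR pixel arrays, whereas `QImage.Format_RGB888` expects RGB bytes with a known number of bytes per row. In a PyQt5 program, for example, the conversion is typically `cv2.cvtColor(frame, cv2.COLOR_BGR2RGB)` followed by `QImage(rgb.data, w, h, 3 * w, QImage.Format_RGB888)` and `QPixmap.fromImage(...)` shown on a `QLabel`. The dependency-free sketch below shows only the channel reordering and the arguments QImage needs, so it runs without OpenCV or Qt installed; the function name `frame_to_rgb888` and the nested-list frame representation are assumptions for illustration.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]  # one pixel as (blue, green, red), OpenCV's channel order


def frame_to_rgb888(frame: List[List[Pixel]]) -> Tuple[bytes, int, int, int]:
    """Convert a BGR frame (rows of (b, g, r) tuples, values 0-255) into the
    (data, width, height, bytes_per_line) arguments of a Format_RGB888 QImage."""
    height = len(frame)
    width = len(frame[0]) if height else 0
    data = bytearray()
    for row in frame:
        if len(row) != width:
            raise ValueError("all rows must have the same width")
        for b, g, r in row:
            data += bytes((r, g, b))  # swap BGR -> RGB
    bytes_per_line = 3 * width  # 3 bytes per pixel, rows packed without padding
    return bytes(data), width, height, bytes_per_line
```

Passing `bytes_per_line` explicitly matters: without it, QImage assumes every scanline is 32-bit aligned, which a packed 3-byte-per-pixel frame of arbitrary width need not be.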

Results

Figure 3 shows the recognition results for some of the faces enrolled for encoding.

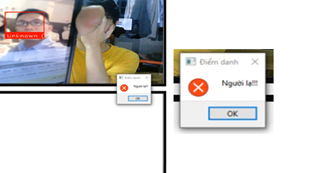

When an image of a student who is not in the dataset is presented for attendance, the results are as shown in Figure 4.

Conclusions

In this design project, an attendance application with face recognition and fake-face detection was developed. The work focused on three aspects: operating the face recognition algorithm successfully, tuning the values of the fake-face recognition to achieve good recognition results, and applying both in a practical attendance program with a designed interface. The user interface was designed with Qt Designer, and the program was written in Python using Visual Studio Code. The recognition results for the enrolled faces show that the algorithm is stable: recognition was somewhat noisy at first, but after several rounds of parameter tuning it became largely stable. Because the camera has limited resolution and no autofocus, the captured images are sometimes blurry and difficult to identify, which may be a secondary source of interference.

References

- “Biometric,” Communications of the ACM, vol. 43, no. 2, 2000, doi: 10.1145/328236.328110.

- H. Y. S. Lin and Y. W. Su, “Convolutional neural networks for face anti-spoofing and liveness detection,” in 2019 6th International Conference on Systems and Informatics (ICSAI 2019), Institute of Electrical and Electronics Engineers Inc., 2019, pp. 1233–1237, doi: 10.1109/ICSAI48974.2019.9010495.

- A. Mahmoud, K. Saad, A. Saad, and K. Mahmoud, “Anti-spoofing using challenge-response user interaction.” [Online]. Available: https://fount.aucegypt.edu/etds/1213

- P. Anthony, B. Ay, and G. Aydin, “A review of face anti-spoofing methods for face recognition systems,” in Proc. 2021 Int. Conf. Innov. Intell. Syst. Appl. (INISTA 2021), 2021, doi: 10.1109/INISTA52262.2021.9548404.

- National Institute on Aging (NIA). (2022). What Happens to the Brain in Alzheimer's Disease. Retrieved from https://www.nia.nih.gov/health/alzheimers-causes-and-risk-factors/what-happens-brain-alzheimers-disease. [Accessed: 11/07/2023]

- S. Chakraborty and D. Das, “An Overview of Face Liveness Detection,” Int. J. Inf. Theory, vol. 3, no. 2, pp. 11–25, April 2014, doi: 10.5121/ijit.2014.3202.